TAO - Meta's scalable architecture powering world's largest social graph

TAO's architecture, design decisions and trade-offs

Meta (formerly Facebook) has more than 2 billion Daily Active Users (DAU). The platform generates millions of posts, images, videos, etc everyday.

The system needs to generate the content for every active user. Every user’s content is unique due to restrictions and privacy checks.

Friendships, checkins, comments, reactions etc result in a dynamic social graph. The platform must handle the large scale and deliver the user content within fraction of seconds.

Meta developed TAO - a geographically distributed graph data abstraction to power the largest social graph. TAO is optimized to handle Facebook’s billions of reads and millions of write requests daily.

In this article, we will go over Facebook’s original architecture and its limitations. We will then walkthrough how the architecture evolved into TAO. We will learn how TAO’s architecture solved the problem through its robust design. And conclude by going over TAO’s performance on production workloads.

Problem Statement

Imagine a Facebook user named Jimmy checkins into a hotel and tags his friends Bob and Jim. This post lands into his friend’s feed. Later, several friends would like the post and few would comment.

This would go on for a couple of hours or days until Jimmy creates another post in near future.

Did you notice what just happened ?

In short - A user created a post, it got some traction and engagement. The view of the post kept changing as the reactions and comments kept flowing. In a few days, the engagement faded and user created another post. And the cycle continued.

One more observation is that the post’s view differs for every user. For eg:- If the post is public, a non-friend user may not be able to comment. Similarly, due to restrictions one or more friends might not comment or share.

A single post data looks like a graph of inter-connected nodes. If you extend it to all Facebook users, it becomes a social graph. The social graph is dynamic as it changes every second due to user’s engagement on the post.

To summarize, this is the core problem that Facebook needs to solve :

Problem - Generate customized user content by applying privacy checks and filters on ever changing social graph. Moreover, develop a resilient, fault-tolerant and a performant system to handle increasing load from billion users.

Let’s now go over the original architecture that solved this problem.

Original Architecture

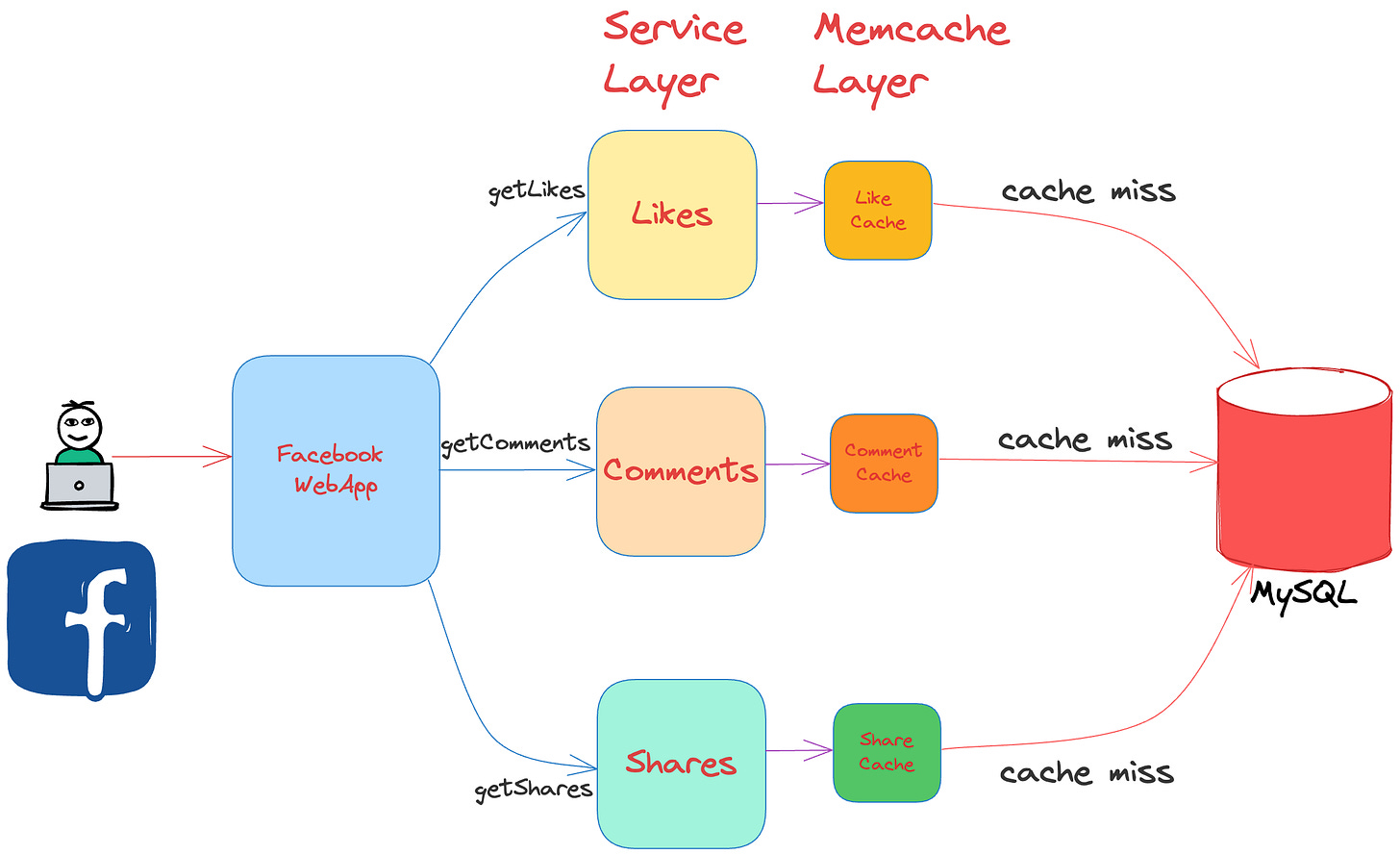

In the beginning, Facebook adopted a PHP-MySQL stack to render the social graph. They used Memecache as a look-aside cache to accelerate the performance.

Here’s how the architecture looked like :

The WebApp layer was an aggregation layer. It fetched the data from different upstream services for likes, comments, shares, post data etc. Each service used Memcache to speed up reads.

Before reading further, take a moment to think what could be the potential downsides of this approach ?

The original architecture had the following limitations :-

Distributed control logic - The logic was spread across different services which increased failure modes. For eg:- Thundering Herd on MySQL in case of a cache miss.

Inefficient access pattern - The queries need to fetch the list of different associations (comments, reactions, etc). Also, it must handle concurrent updates to a cached list.

Expensive read-after-write consistency - For high availability, the system used asynchronous master/slave replication. Invalidating cache in replica was challenging. As a result, it was expensive to achieve read-after-write or strong consistency.

Now, that you understand the original architecture and its limitations, let’s now focus on how Meta solved it.

TAO - Graph Data abstraction

TAO was developed as a service which would expose a graph to the clients. For eg:- Given a post’s identifier, it would aggregate and return all the data to the WebApp layer.

It didn’t allow the clients to directly access the MySQL database. Clients would use TAO’s APIs to fetch data they needed.

TAO provided the following two data models :-

Object - An object represented data entity such as a user, post, or a comment. Each object was uniquely identified by a 64-bit field.

Associations - An association was either a relationship between two objects or action taken by one object on another. Each association had a type indicating relationship like LikedBy, FriendOf, PostedBy, etc.

APIs

Object APIs - These APIs were used to create, update and fetch the object data.

Association APIs - These APIs were useful for defining relationship between the objects. Further, they provided several variations such as :-

assoc_get - Fetch all associations between two objects.

assoc_count - Count of associations. Useful to get count of comments or likes.

assoc_range - To fetch a range of associations using pagination.

assoc_tim_range - Fetch associations within the time constraints

Let’s now understand the internal architecture that powered these APIs and data models.

TAO Architecture

TAO’s design used a layered architecture approach. Each layer had a different responsibility i.e caching or data storage.

TAO used replication for high availability and failover. Further, it scaled geographically by maintaining a copy of social graph in every region.

Let’s go over each layer and understand the design decision.

Storage layer

TAO stored the object and association data in MySQL servers. The data was sharded by using consistent hashing.

Every TAO API mapped to a SQL query at the lowest level. It leveraged MySQL’s indexes to speed up lookup of range queries for association APIs.

Design decisions

MySQL - Although a NoSQL database like LevelDB could have worked, MySQL was chosen for non-user-facing functions like bulk imports, replica creation, async replication, and atomic transactions.

Consistent hashing - This ensured uniform load distribution and simplified request routing.

Caching layer

The caching layer served the TAO’s APIs. Every server in this layer cached the data from the storage layer using LRU (Least Recently Used) policy.

Every server hosted cached data for multiple shards. In other words, each database shard mapped to a single cache server.

The caching servers communicated with each other and understood the semantics of the data. For eg:- if the association count was 0, then caching server wouldn’t query the associations.

The cache layer was further divided into two :

Leader tier - Servers in this tier handled all database interactions.

Follower tier - Clients interacted with this tier. In case of cache misses, requests were forwarded to the leader tier.

There was a single leader tier and several follower tiers. Additional follower tiers could be added to handle the increasing load.

Design decisions

Single Tier vs Leader-Follower tiers - A single large tier is prone to hot spots. The Leader-Follower architecture spreads the load evenly.

Replication

TAO used multi-region asynchronous replication. Data was replicated from a master region to a slave region, maintaining cache consistency through invalidation messages.

Write flow

Followers directed writes to leaders. If a leader was a slave, writes were redirected to the master’s leader. Data was updated synchronously across tiers, emitting invalidation messages for cache consistency.

The below diagram illustrates how a follower tier in Slave handles a write.

Read flow

Reads were performed on followers in a region, ensuring data was served locally for performance.

The below diagram shows how reads are served locally in the same region.

Design decisions

Cache invalidation - To ensure cache consistency in master-slave region, invalidation messages were sent along with the replication stream. Caching servers used the invalidation messages for evicting or updating the entries.

Bottlenecks, Optimizations and Failovers

Hot spots

It was common for a post to become viral. As a result, a given shard would receive a large number of requests. This would make the shard a hot spot.

Solution -

Shard cloning - The shard was cloned and replicated on several servers in a follower tier. This technique rebalanced the load.

Client caching - The server returned the access rate for the object. In case the rate exceeded a pre-defined threshold, the client cached the data. This reduced the load on the servers.

High-degree objects

A limit of 6000 was imposed on the association list response returned by TAO. However, many objects had more than 6000 associations. For eg:- celebrities getting millions of comments.

Further, it was common for many objects to have 0 associations. And TAO would get assoc_get requests.

In case of a cache miss, this would result in large number of database queries.

Solution -

Association count - In case the count is 0, the query would directly return instead of checking the other objects in the database.

Application semantics - They leveraged the data such as creation time of the objects and the associations and limited the search of associations.

Consistency

TAO provided read-after-write consistency in the absence of failures. This was applicable for any client writing to and reading from the same follower tier.

The design preferred availability over strong consistency. In case of failures, the data was eventually consistent.

Hence, the clients had to tolerate some amount of data staleness. This was a fair trade-off given the load that the system had to serve.

Strong consistency

TAO provided strong consistency to a subset of APIs such as authentication. These APIs were marked as critical, guaranteed strong consistency at the cost of increased latency.

Failovers

TAO had to handle several failure scenarios such as :

Master database failure - Slave’s database was elected as the new master. All the cache-misses and writes were directed to the newly elected master.

Leader failures - Followers sent the reads to the database. While writes were sent to a random leader in the tier.

Follower failures - Every follower tier had a backup. In case of server timeouts, the clients sent request to the backup tier.

Performance

Here’s how TAO performed on Facebook’s production workload :-

Availability - 4.9 * 10^-6 queries failed over a period of 90 days.

Hit rate - The overall hit rate was 96.4% for read queries.

Failover - Slave was promoted to master 0.25% times due to database failures, upgrades or maintenance.

Replication lag - p99 for slave database lag was 3 seconds.

Conclusion

Meta faced a challenging problem while customized content for each user using its dynamic social graph. Initially, they used PHP stack, fetched data from MySQL and used Memcache as a look-aside cache.

Meta developed TAO as a graph data abstraction layer. It solved several downsides of original look-aside cache architecture.

Through its layered architecture, it scaled to handled billions of reads and millions of writes. Further, it favoured high availability over strong consistency.

TAO solved common problems in distributed systems such as hot spots, thundering herd and automatic failover. They adopted robust mechanisms like shard cloning and caching to build a resilient system.

If you have reached here, then here are few points to ponder :-

Do you think a graph database like Neo4j would have worked instead of TAO ?

How do other social media websites like LinkedIn, Twitter, Threads, etc manage graph data ?

Share your thoughts on the above points in the comments.

Reference - Facebook TAO

Before you go:

❤️ the story and follow the newsletter for more such articles

Your support helps keep this newsletter free and fuels future content. Consider a small donation to show your appreciation here - Paypal Donate

Great article