Unraveling the Internals of Video Streaming Services

A comprehensive guide to the key concepts, terminology, and workings of video streaming apps

The video streaming industry has grown rapidly over the last decade, and video now makes up 82.5% of global internet traffic. That's not surprising, given how much of our time we spend watching Instagram Reels or YouTube Shorts.

Recently, tech companies have started asking video streaming design problems in system design interviews. Candidates often struggle due to a lack of experience in this area.

The best way to learn about video streaming is to build a strong foundation, understand the complex process, and go over real-world case studies.

This article simplifies complex concepts with examples and illustrations. Whether you're preparing for system design interviews or learning about video streaming, it covers key terms and the basics of video processing.

Let’s start our journey by learning the core concepts!

Core Concepts

While recording a video, a camera captures a stream of pictures in quick succession. So, a video file is essentially a continuous sequence of images.

When you play a video, the player displays these pictures one after another, and the human brain perceives them as continuous motion.

Frame

In the context of video, each captured picture is known as a frame. A camera generally captures 30-60 frames per second (fps).

The higher the frame rate, the smoother the motion appears. Lower frame rates make a video look choppy.

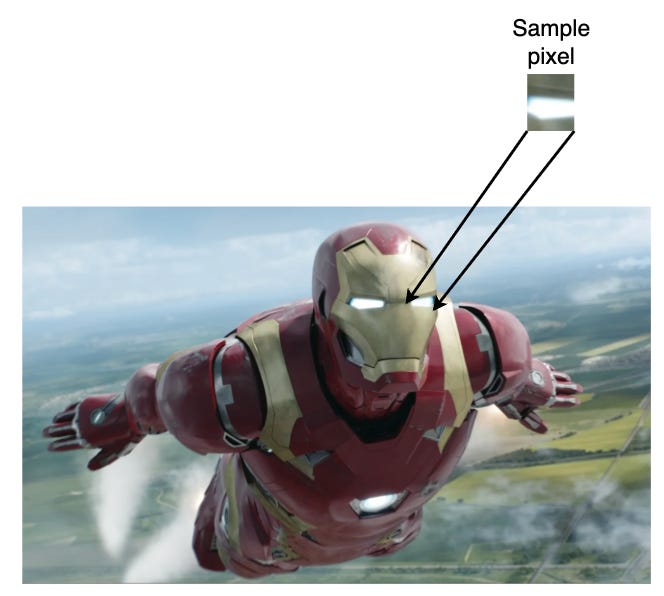
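At a constant frame rate, each frame stays on screen for 1/fps of a second. The short Python sketch below (the helper name `frame_interval_ms` is introduced here purely for illustration) turns the 30-60 fps range into per-frame display times.

```python
def frame_interval_ms(fps: float) -> float:
    """How long each frame stays on screen, in milliseconds, at a constant frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps

for fps in (30, 60):
    print(f"{fps} fps -> {frame_interval_ms(fps):.1f} ms per frame")
# 30 fps -> 33.3 ms per frame
# 60 fps -> 16.7 ms per frame
```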

Pixel

Each frame can be broken down into small, equal-sized units known as pixels. A pixel is a tiny square-like region that encodes the colour of one point in the image.

A pixel's colour can be computed by mixing Red (R), Green (G), and Blue (B). In computing, each of R, G, and B is known as a channel.

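Each channel is allocated a certain number of bits to encode its colour information. For example, standard videos use 8 bits each for R, G, and B, resulting in 24 bits per pixel. Higher bit-depth formats use more bits per channel: HDR video typically uses 10 or 12 bits, and some professional workflows use 16 bits per channel (48 bits per pixel).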
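To make the channel arithmetic concrete, here is a small Python sketch. It assumes three channels (R, G, B) that all use the same bit depth, and the helper names are hypothetical. It also shows how many distinct colours a pixel can represent, which is 2 raised to the number of bits per pixel.

```python
def bits_per_pixel(bits_per_channel: int, channels: int = 3) -> int:
    """Total bits used to store one pixel with the given number of colour channels."""
    return bits_per_channel * channels

def distinct_colours(bits_per_channel: int, channels: int = 3) -> int:
    """Number of distinct colours one pixel can represent at this bit depth."""
    return 2 ** bits_per_pixel(bits_per_channel, channels)

print(bits_per_pixel(8), distinct_colours(8))  # 24 16777216
print(bits_per_pixel(16))                      # 48
```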

Bitrate

Bitrate is the amount of data used to encode a video stream per unit of time. It is measured in bits per second (bps), kilobits per second (kbps), or megabits per second (Mbps).

Bitrate largely determines the overall video quality and the file size. For a given codec, a higher bitrate generally results in better quality and a larger file.

As seen in the previous section, each pixel is represented using a set of bits. If each pixel uses more bits, the video requires a higher bitrate.

Certain scenes, such as fast-moving action sequences, require a higher bitrate than mostly static scenes, such as a landscape shot.
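Ignoring audio and container overhead, and assuming a constant bitrate (real encoders often vary it from scene to scene), the size of a stream is simply bitrate × duration. A minimal Python sketch follows. The 5 Mbps figure is an arbitrary example value, not a recommendation.

```python
def file_size_bytes(bitrate_bps: float, duration_s: float) -> float:
    """Approximate stream size: bitrate (bits/second) x duration (seconds), in bytes."""
    return bitrate_bps * duration_s / 8  # 8 bits per byte

# Example: a hypothetical 5 Mbps stream lasting 10 seconds
size = file_size_bytes(5_000_000, 10)
print(f"{size / 1_000_000:.2f} MB")  # 6.25 MB
```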

Resolution

Video resolution is the number of pixels that make up each frame of a video. A higher-resolution video has more pixels per frame than a lower-resolution one.

Video resolution is expressed as width × height in pixels. An SD (480p) video has 640×480 pixels, while an HD (720p) video has 1280×720 pixels.
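The following sketch shows how pixel counts grow with resolution. The 480p and 720p dimensions come from the text above. 1080p (1920×1080) is added for comparison.

```python
# Common resolutions as (width, height) in pixels; 1080p is included for comparison.
RESOLUTIONS = {
    "480p (SD)": (640, 480),
    "720p (HD)": (1280, 720),
    "1080p (Full HD)": (1920, 1080),
}

def pixel_count(width: int, height: int) -> int:
    """Number of pixels in one frame."""
    return width * height

for name, (w, h) in RESOLUTIONS.items():
    print(f"{name}: {w}x{h} = {pixel_count(w, h):,} pixels")
# 480p (SD): 640x480 = 307,200 pixels
# 720p (HD): 1280x720 = 921,600 pixels
# 1080p (Full HD): 1920x1080 = 2,073,600 pixels
```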

Now that you are familiar with the basic concepts, can you guess the size of a 10-second HD video file? 🤔

Let’s do some quick calculations and compute the size.

Frames per second = 30

Resolution of each frame = 720p, or 1280 × 720 = 921,600 pixels

Size of each pixel = 24 bits

Size of each frame = Pixels per frame × Size of each pixel = 921,600 × 24 = 22,118,400 bits

Size of 1 second of video = Frames per second × Size of each frame = 30 × 22,118,400 = 663,552,000 bits

Size of a 10-second video = 10 × Size of 1 second of video = 10 × 663,552,000 = 6,635,520,000 bits, or about 6,636 Mb (megabits), which is roughly 829 MB (megabytes)

Over 800 MB is far too big for a 10-second video 🤯
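Here is the same back-of-the-envelope calculation as a reusable Python function. It assumes uncompressed frames with a fixed number of bits per pixel and ignores audio. The function name is illustrative.

```python
def raw_video_size_bits(width: int, height: int, bits_per_pixel: int,
                        fps: int, seconds: int) -> int:
    """Size of uncompressed video frames (no audio, no container overhead), in bits."""
    pixels_per_frame = width * height
    bits_per_frame = pixels_per_frame * bits_per_pixel
    bits_per_second = bits_per_frame * fps
    return bits_per_second * seconds

bits = raw_video_size_bits(1280, 720, 24, 30, 10)
print(f"{bits:,} bits")                          # 6,635,520,000 bits
print(f"{bits / 1e6:,.0f} Mb (megabits)")        # 6,636 Mb (megabits)
print(f"{bits / 8 / 1e6:,.2f} MB (megabytes)")   # 829.44 MB (megabytes)
```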

Have you come across such a big file while recording a short video?

The answer is no. So how does your device produce small video files of just 1-5 MB for a 10-second video? The magic lies in a process known as video encoding.
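Comparing the raw size with a typical recorded file shows how much work the encoder does. This sketch treats the 1-5 figure as megabytes (1 MB = 1,000,000 bytes) and reuses the raw size of 6,635,520,000 bits computed above for a 10-second 720p clip. Both helper functions are hypothetical.

```python
RAW_BITS = 6_635_520_000  # uncompressed 10-second 720p clip at 30 fps, 24 bits/pixel

def compression_ratio(raw_bits: int, encoded_bytes: int) -> float:
    """How many times smaller the encoded file is than the raw frames."""
    return raw_bits / (encoded_bytes * 8)

def average_bitrate_mbps(encoded_bytes: int, seconds: float) -> float:
    """Average bitrate implied by a file of this size and duration."""
    return encoded_bytes * 8 / seconds / 1e6

for size_mb in (1, 5):
    encoded = size_mb * 1_000_000  # decimal megabytes
    print(f"{size_mb} MB: ~{compression_ratio(RAW_BITS, encoded):.0f}x smaller, "
          f"~{average_bitrate_mbps(encoded, 10):.1f} Mbps")
# 1 MB: ~829x smaller, ~0.8 Mbps
# 5 MB: ~166x smaller, ~4.0 Mbps
```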

Video Encoding

Video encoding converts a raw video file into a format that's optimized for storage, transmission, and playback. A good encoder aims to keep any loss in quality small enough that viewers barely notice it.

An encoder/decoder pair is known as a codec (encoder-decoder). Some popular codecs are H.264, H.265 (HEVC), VP9, AV1, MPEG-2, and MPEG-4.

A raw video file goes through the following steps during encoding:

Frame-by-Frame Analysis - The encoder analyses each frame and identifies redundant information within it, such as large areas of similar colour.

Temporal Compression - The encoder removes redundancy across consecutive frames, for example by storing only what changes from one frame to the next instead of repeating unchanged content.

Bitrate control - The encoder adjusts the bitrate depending on the frame complexity.

Compression - The encoder applies a compression algorithm that is either lossy (it discards some data) or lossless (it retains all of it).

Output - The final output file contains the video, audio and other metadata.
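Real codecs such as H.264 rely on much more sophisticated techniques (motion estimation, transforms, entropy coding), but the core idea of temporal compression can be shown with a toy. The sketch below is a simplified illustration, not how any real codec stores data. It keeps the first frame in full and, for every later frame, stores only the pixels that changed.

```python
from typing import List, Tuple

Frame = List[int]              # a frame as a flat list of pixel values
Delta = List[Tuple[int, int]]  # (pixel index, new value) for each changed pixel

def encode_deltas(frames: List[Frame]) -> Tuple[Frame, List[Delta]]:
    """Toy temporal compression: full first frame, then per-frame lists of changes."""
    if not frames:
        raise ValueError("need at least one frame")
    keyframe = list(frames[0])
    deltas: List[Delta] = []
    previous = frames[0]
    for frame in frames[1:]:
        if len(frame) != len(previous):
            raise ValueError("all frames must have the same size")
        # Keep only the pixels whose value differs from the previous frame
        changed = [(i, px) for i, (old, px) in enumerate(zip(previous, frame)) if px != old]
        deltas.append(changed)
        previous = frame
    return keyframe, deltas

frames = [
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 9, 0, 0, 0, 0, 0],  # one pixel changes
    [0, 0, 9, 9, 0, 0, 0, 0],  # one more pixel changes
]
keyframe, deltas = encode_deltas(frames)
raw_values = sum(len(f) for f in frames)
stored_values = len(keyframe) + 2 * sum(len(d) for d in deltas)  # index + value per change
print(deltas)                     # [[(2, 9)], [(3, 9)]]
print(raw_values, stored_values)  # 24 12
```

The savings grow with the number of frames and with how little changes between them, which is why static scenes compress far better than action sequences.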

Video decoding

Video decoding is the reverse process of encoding. The decoder reconstructs the frames from the encoded file so that the player can display them.

Decoding happens in real time as you play a video. Both encoding and decoding are compute-intensive operations, which is why many devices include dedicated hardware for them.
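Decoding a toy "keyframe plus changes" format runs the process in reverse: start from the full keyframe and apply each list of changed pixels in order. The data format here (a keyframe plus `(pixel index, new value)` pairs) is an illustrative assumption, not a real codec's bitstream. Because each frame builds on the one before it, this toy decoder must process frames in sequence.

```python
from typing import List, Tuple

Frame = List[int]              # a frame as a flat list of pixel values
Delta = List[Tuple[int, int]]  # (pixel index, new value) for each changed pixel

def decode_deltas(keyframe: Frame, deltas: List[Delta]) -> List[Frame]:
    """Rebuild every frame by applying each delta on top of the previous frame."""
    frames = [list(keyframe)]
    for delta in deltas:
        frame = list(frames[-1])  # start from a copy of the previous frame
        for index, value in delta:
            frame[index] = value
        frames.append(frame)
    return frames

# Hypothetical encoded data: an 8-pixel keyframe plus two small deltas
keyframe = [0, 0, 0, 0, 0, 0, 0, 0]
deltas = [[(2, 9)], [(3, 9)]]
for frame in decode_deltas(keyframe, deltas):
    print(frame)
# [0, 0, 0, 0, 0, 0, 0, 0]
# [0, 0, 9, 0, 0, 0, 0, 0]
# [0, 0, 9, 9, 0, 0, 0, 0]
```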

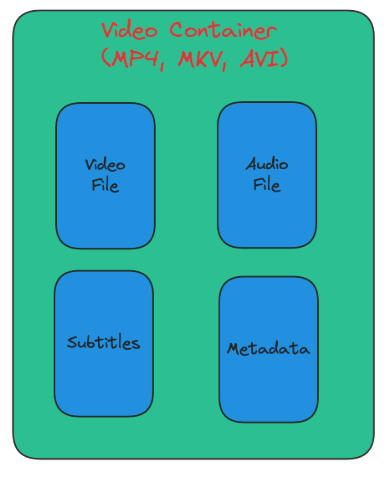

A video file is more than just a sequence of images. It can also contain audio, subtitles, thumbnails, watermarks, and more. How are all these things combined into a single file? 🤔

Let's find out in the next subsection.

Video Container

The video stream, audio, subtitles, and metadata are packaged together in a file known as a video container. The container is a wrapper that lets playback software keep these different streams in sync for smooth playback.

Video containers have different file formats such as MP4, MKV, AVI, MOV, etc.
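Many containers are organised as a sequence of nested chunks. MP4, for instance, is based on the ISO Base Media File Format. In that format, a file is a series of "boxes", each starting with a 4-byte big-endian size and a 4-character type (such as `ftyp`, `moov`, or `mdat`). Per-track information for video, audio, and subtitles lives inside `moov`. The sketch below lists only the top-level boxes and skips the validation a real parser would also perform.

```python
import struct
from typing import List, Tuple

def list_top_level_boxes(data: bytes) -> List[Tuple[str, int]]:
    """Return (box type, box size) for each top-level box in MP4 / ISO BMFF bytes."""
    boxes = []
    offset = 0
    while offset + 8 <= len(data):
        size, box_type = struct.unpack(">I4s", data[offset:offset + 8])
        header = 8
        if size == 1:  # a 64-bit "largesize" follows the type field
            if offset + 16 > len(data):
                break
            (size,) = struct.unpack(">Q", data[offset + 8:offset + 16])
            header = 16
        elif size == 0:  # the box extends to the end of the data
            size = len(data) - offset
        if size < header:  # malformed box: stop instead of looping forever
            break
        boxes.append((box_type.decode("latin-1"), size))
        offset += size
    return boxes

# A tiny hand-built example: an 'ftyp' box followed by an empty 'moov' box
ftyp = struct.pack(">I4s4sI4s", 20, b"ftyp", b"isom", 0, b"isom")
moov = struct.pack(">I4s", 8, b"moov")
print(list_top_level_boxes(ftyp + moov))  # [('ftyp', 20), ('moov', 8)]
```

For a small real file, something like `list_top_level_boxes(Path("clip.mp4").read_bytes())` (with `from pathlib import Path`) should list its boxes. `clip.mp4` is a placeholder name.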

If I use QuickTime Player on a Mac to record a video, it gets stored as a .mov file. MOV is Apple's QuickTime format, and some non-Apple devices, browsers, and players may not play it without additional software.

How do video streaming websites ensure that different devices play the video file without any additional software installation ? 🤔

We will now look at video transcoding, which helps streaming websites achieve this.

Video Transcoding

Let's assume that I record a video using QuickTime Player in .mov format and upload it to YouTube. YouTube recognises that the format may not be compatible with many desktop, mobile, or TV devices.

The end-user devices might be expecting an MP4 file for playback. Hence, YouTube's system must first convert the file from .mov to .mp4 format.

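This process of converting a video from one format to another is known as transcoding. (Strictly speaking, changing only the container without re-encoding the video is called remuxing; transcoding decodes the video and re-encodes it.) A video goes through the following sequence of steps during transcoding: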

Decode (decompress) the source video into raw frames

Transform the raw frames as needed:

Change the bitrate if required

Modify the frames per second

Change the resolution (for example, 1080p to 720p)

Encode (compress) the video with the target codec and package it in the target container format (for example, .mp4 or .avi)

Output the transcoded video and store it in permanent storage
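One common way to perform these steps is the open-source `ffmpeg` command-line tool, which decodes, transforms, and re-encodes in a single run. This is an assumption for illustration; the article does not say which tool any streaming service uses. The Python helper below only builds the argument list. It assumes an ffmpeg build that includes the `libx264` encoder, and the file names and the 2500k bitrate are example values.

```python
import shlex
from typing import List, Optional

def build_ffmpeg_transcode_cmd(src: str, dst: str, height: Optional[int] = None,
                               fps: Optional[int] = None,
                               video_bitrate: Optional[str] = None) -> List[str]:
    """Build (but do not run) an ffmpeg command that re-encodes src into dst."""
    cmd = ["ffmpeg", "-i", src]                 # input file; ffmpeg decodes it
    if height is not None:
        cmd += ["-vf", f"scale=-2:{height}"]    # resize, keeping aspect ratio (even width)
    if fps is not None:
        cmd += ["-r", str(fps)]                 # output frame rate
    if video_bitrate is not None:
        cmd += ["-b:v", video_bitrate]          # target video bitrate, e.g. "2500k"
    cmd += ["-c:v", "libx264", "-c:a", "aac", dst]  # H.264 video, AAC audio
    return cmd

cmd = build_ffmpeg_transcode_cmd("input.mov", "output.mp4",
                                 height=720, fps=30, video_bitrate="2500k")
print(shlex.join(cmd))
# ffmpeg -i input.mov -vf scale=-2:720 -r 30 -b:v 2500k -c:v libx264 -c:a aac output.mp4
# To actually run it (requires ffmpeg on PATH): subprocess.run(cmd, check=True)
```

`scale=-2:720` asks ffmpeg to compute a width that preserves the aspect ratio and is divisible by 2, which the common yuv420p pixel format requires.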

Once transcoding finishes, the video is ready for streaming. Transcoding happens asynchronously, and the user is notified when it is done.
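Because transcoding is slow and compute-heavy, a common pattern is to accept the upload, put a job on a queue, and let background workers notify the user when the job finishes. The asyncio sketch below models that flow in a single process. `transcode`, `notify_user`, and `handle_upload` are hypothetical stand-ins, and a production system would more likely use a durable message queue and a separate fleet of workers.

```python
import asyncio
import uuid

NOTIFICATIONS: list = []  # collected here so the demo's effect is easy to inspect

async def transcode(video_id: str) -> str:
    """Hypothetical stand-in for the real transcoding work (e.g. an ffmpeg run)."""
    await asyncio.sleep(0.1)  # simulate a long-running, compute-heavy job
    return f"{video_id}.mp4"

async def notify_user(user: str, output: str) -> None:
    """Hypothetical notification hook (email, push notification, etc.)."""
    NOTIFICATIONS.append((user, output))
    print(f"notify {user}: {output} is ready")

async def worker(queue: asyncio.Queue) -> None:
    """Pull jobs off the queue forever, transcode them, then notify the uploader."""
    while True:
        user, video_id = await queue.get()
        try:
            output = await transcode(video_id)
            await notify_user(user, output)
        finally:
            queue.task_done()

def handle_upload(queue: asyncio.Queue, user: str) -> str:
    """Accept an upload, enqueue a transcoding job, and return right away."""
    video_id = uuid.uuid4().hex[:8]
    queue.put_nowait((user, video_id))
    return video_id

async def main() -> list:
    queue: asyncio.Queue = asyncio.Queue()
    workers = [asyncio.create_task(worker(queue)) for _ in range(2)]
    ids = [handle_upload(queue, user) for user in ("alice", "bob", "carol")]
    print("upload accepted:", ids)  # printed before any transcoding has finished
    await queue.join()              # demo only: wait until every job is processed
    for task in workers:
        task.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return ids

asyncio.run(main())
```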

Now that you are familiar with the basic concepts of video streaming, let's look at the challenges that video streaming services face.

Video streaming challenges

A few seconds of entertainment for users comes at the cost of hours of engineering effort. Streaming companies continuously innovate to provide a smooth user experience.

Here are a few common challenges that companies like YouTube, Netflix, and Meta face:

User experience - It's essential to provide a seamless, glitch-free experience to millions of users simultaneously.

Performance - Upload and download latency are key indicators of success for any streaming service. Few users will wait minutes for a video to start playing.

Scalability - Applications such as Facebook Live or Instagram Live allow celebrity users to connect with millions of users simultaneously. Handling millions of connections while delivering a real-time experience is a hard problem.

End-user devices - Streaming services have to support a multitude of devices. Every device has its own OS, hardware and constraints. Companies have to ensure compatibility with all the end-user devices.

In my next article, I will discuss a few real-world case studies and how each company has tackled its own set of challenges.

Let’s summarise and revisit the concepts that you have learnt so far.

Conclusion

A video captured by a camera is a sequence of images. Each image is known as a frame, and a camera typically captures 30-60 frames per second.

A frame is a collection of equal-sized, square-shaped units called pixels. A pixel contains the colour information for the portion of the image it represents.

A video has a resolution that defines how many pixels each frame uses. Higher resolutions (for example, HD at 720p, or 1280×720) capture finer detail and lead to better picture quality.

Bitrate is the amount of data used to represent each second of video. It needs to be adjusted based on the frame rate, the resolution, and the complexity of the scenes.

A raw video file is very large, so it goes through encoding, which compresses it and reduces the file size. Encoding optimizes a video file for storage, transmission, and playback.

Streaming companies use a process called transcoding to convert a video from one format into another. This ensures compatibility with different devices. The process can also adjust the bitrate, frame rate, and resolution.

Should transcoding be a synchronous process, given that it's compute-heavy, or should it be asynchronous? Leave your thoughts in the comments below.

Stay tuned for the next article where we’ll understand the technologies powering the streaming services.
