XFaaS: Meta's Scalable and Cost-efficient Serverless Platform

XFaaS: Addressing Key Challenges in Serverless Computing

Have you ever wondered how you could handle trillions of requests without managing servers? Well, XFaaS, Meta's serverless platform, processes trillions of requests daily in a cost-effective manner.

In the past decade, the adoption of serverless platforms has grown rapidly. Cloud providers such as AWS, Azure and GCP (Google Cloud Platform) have developed serverless platforms for their customers.

FaaS, which stands for Function as a Service, is a popular choice for developers because it's a serverless way to write and deploy code. With FaaS, they no longer need to manage the infrastructure and only pay for the compute resources they use.

However, the ease of use comes at an additional cost for the Cloud providers. They have to deal with challenges of resource utilization and cost optimization. In addition, they need to handle the scale, fault tolerance and resource isolation in the cloud.

Recently, Meta published a paper describing the details of XFaaS. XFaaS achieves a CPU utilization of 66%, which, based on anecdotal knowledge, is higher than the industry average. The paper introduces optimizations that result in faster responses and more efficient resource usage across datacenter regions. Further, XFaaS uses a technique similar to TCP’s congestion control to protect downstream services.

In this article, we'll dive deep into XFaaS and how it solves the different bottlenecks. We'll cover the following points:-

Working of FaaS platform & its challenges.

How XFaaS addressed the challenges.

XFaaS architecture

XFaaS’s performance on production workloads.

With that, let’s begin by understanding the basics of FaaS platform & its challenges.

Working of FaaS platform & its challenges

Beginners might think "serverless" means there are no servers at all. Not quite! It just means developers don't have to deal with them.

The platform takes care of the servers behind the scenes, letting developers focus on their code (the business logic). It's like having a helper for your computer: they handle the server stuff, you just write the code!

FaaS platforms enable the developers to develop and deploy functions, also called lambdas. The platform provides flexibility to use different languages like C++, Java, Python, GoLang, and so on.

The below diagram shows high-level working of FaaS platforms :-

Here are some benefits of FaaS platforms :-

Pay-as-you-go - Only pay for the compute used by your functions. You no longer need to worry about servers remaining idle.

Scalability - Platform scales and handles the increasing load.

However, these benefits come with additional costs for the owners of FaaS platforms. Behind the scenes, the platforms still need to manage servers to handle the client requests.

Let’s now look at some common challenges that FaaS platforms face.

Cold Start Time

When a client sends a request to a FaaS platform, the platform performs several tasks before actually executing the request. This creates an overhead for each request, also known as cold start time, which can impact the overall latency and performance.

Cold Start Time Breakdown

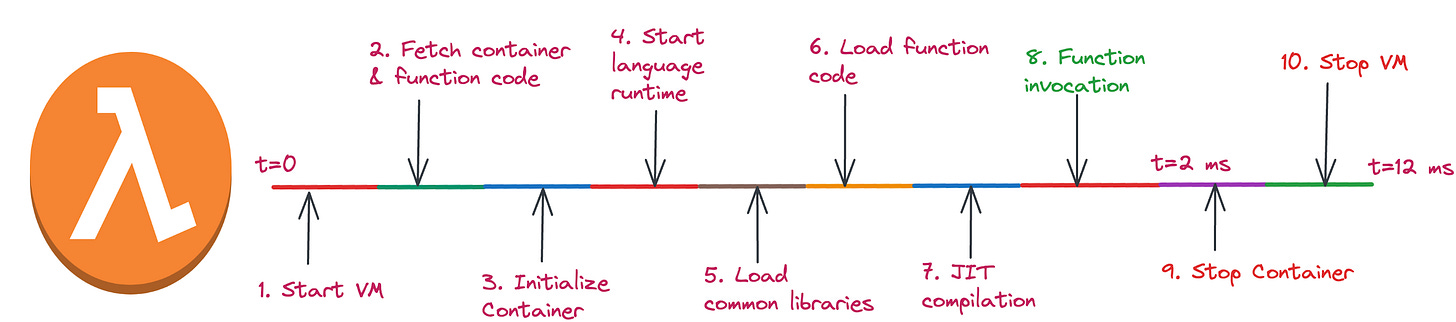

The below diagram illustrates the time spent by server while responding to the request :-

As the diagram shows, processing a request involves 10 steps. The first 7 steps happen before the actual function execution.

Idle Time After Execution

After executing the function, the server waits for a period (T) before shutting down (steps 9,10). Here's a look at the downsides associated with different values of T :-

T is too small - The server shuts down soon after each execution. A request that arrives within T still finds a warm server and avoids a cold start, but any request that arrives later forces the server to go through the cold start again, which hurts the latency of subsequent requests.

T is too large - If T is too long and the server doesn't receive another request quickly, it will be underutilized, wasting resources.
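
The trade-off can be made concrete with a small simulation. The sketch below is a hypothetical model and is not taken from XFaaS: it assumes a single server, an invented list of request arrival times, and a `keep_alive` parameter that plays the role of T. It counts how many cold starts occur and how many seconds the server spends running without work.

```python
def simulate_keep_alive(arrivals, keep_alive):
    """Count cold starts and idle-but-running seconds for one server.

    arrivals: sorted request arrival times in seconds (hypothetical trace).
    keep_alive: the window T the server stays up after serving a request.
    Execution time is treated as zero to keep the model simple.
    """
    cold_starts = 0
    idle_seconds = 0.0
    last = None  # arrival time of the previous request, None before the first
    for t in arrivals:
        if last is None:
            cold_starts += 1  # the very first request always starts cold
        else:
            gap = t - last
            if gap > keep_alive:
                # The server waited the full window, shut down, then restarted.
                cold_starts += 1
                idle_seconds += keep_alive
            else:
                # The server was still warm and idled only for the gap.
                idle_seconds += gap
        last = t
    if last is not None:
        idle_seconds += keep_alive  # the wait after the final request
    return cold_starts, idle_seconds


arrivals = [0, 5, 12, 100, 103, 400]
for T in (1, 10, 120):
    print(T, simulate_keep_alive(arrivals, T))
```

On this made-up trace, a larger T reduces the number of cold starts but increases the time the server sits idle, which is exactly the tension described above.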

Load spikes

FaaS platforms need to handle a wide variety of workloads. Each workload has unique requirements for CPU, memory, and execution time.

The platform doesn't receive a consistent number of requests each day. There can be sudden spikes due to offline computation jobs at certain times. For example, Meta's XFaaS platform experiences a peak-to-trough ratio of about 4.3 for function calls.

The following diagram shows how the load varies on Meta's XFaaS platform:-

To handle these load spikes, cloud providers might consider over-provisioning servers. However, this approach leads to underutilized servers during low-traffic periods, which increases the overall platform cost.
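
A quick back-of-the-envelope calculation shows why sizing capacity for the peak is expensive. The snippet below is only an illustration: it assumes utilization is proportional to load and that capacity is sized exactly for the peak. The `headroom` parameter is a hypothetical safety margin; the only number taken from the article is the 4.3 peak-to-trough ratio.

```python
PEAK_TO_TROUGH = 4.3  # peak-to-trough ratio quoted above for XFaaS function calls


def trough_utilization(peak_to_trough, headroom=0.0):
    """Utilization at the trough when capacity is sized for the peak.

    headroom: hypothetical extra fraction of capacity kept as a safety margin.
    """
    capacity = peak_to_trough * (1.0 + headroom)  # in units of trough load
    return 1.0 / capacity


print(f"{trough_utilization(PEAK_TO_TROUGH):.1%}")  # prints 23.3%
```

Under these assumptions, servers provisioned for the peak would be less than a quarter utilized during the quietest period.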

Overloading Downstream Services

Functions running on a FaaS platform often rely on several other services, such as microservices, shared databases, queueing systems, and data warehouses.

The large scale of a FaaS platform can overload these downstream services if they're not properly scaled. This can be critical if the downstream services handle features that directly impact user experience.

The following illustration shows how a FaaS platform's scaling can affect user-facing requests :-

In this example, the FaaS platform is concurrently processing requests that write to a News Feed database. If the database becomes overloaded, it can slow down read operations, ultimately impacting user availability. (This is a simplified illustration)

It's essential to implement safeguards to prevent downstream services from overloading. This ensures that critical user workflows remain unaffected.

How XFaaS addressed the challenges

Cold Start Time Optimization

To eliminate cold start overhead, XFaaS implements the concept of a Universal Worker. In this model, every worker (server) can execute any function immediately, without the need for cold start.

XFaaS uses the following techniques to optimize the cold start time :-

Function code pre-population - XFaaS categorizes workers into namespaces based on programming languages. Each namespace corresponds to a specific language. The platform proactively pushes function code to the servers' SSDs and keeps the language runtime running in the background. When a function is invoked, the code is loaded directly from the SSD and executed immediately.

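Concurrent function execution - XFaaS can execute multiple functions concurrently within a single Linux process. This is possible because the functions on a private platform come from trusted internal teams, so they do not need the strict per-function isolation that a public cloud must enforce.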

Cooperative JIT compilation - When a new version of a function's code is encountered, one server gathers profiling data for Just-In-Time (JIT) compilation. This data is then shared with other servers, allowing them to skip the profiling phase and JIT-compile that code right away. To further improve the efficiency of the JIT code cache, only a subset of functions is executed on each server.

Handling Load Spikes

Since XFaaS's provisioned capacity isn't enough to handle peak traffic, it utilizes several optimization techniques for managing these spikes.

Here’s how XFaaS tackles increasing load :-

Time-shift computing - It defers the execution of delay-tolerant functions to off-peak hours. Every function has a criticality and a deadline. The deadline ranges from a few seconds to 24 hours.

Global dispatch - To distribute the load evenly and leverage available resources across geographically diverse locations, XFaaS dispatches function execution across different datacenter regions.

Quota - Each function has a predefined quota, and execution is throttled (slowed down) once it reaches the limit. This helps prevent resource exhaustion and ensures fair allocation during peak loads.
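
Time-shift computing can be illustrated with a small greedy planner. This is a simplified sketch, not XFaaS's actual scheduler: the hourly numbers are invented, deadlines and quotas are not modeled, and it assumes critical load never exceeds capacity. Critical calls run in the hour they arrive, while delay-tolerant calls wait in a backlog until spare capacity is available.

```python
def time_shift(critical, deferrable, capacity):
    """Greedy hour-by-hour execution plan.

    critical[h], deferrable[h]: calls arriving in hour h (hypothetical numbers).
    capacity: calls the worker pool can execute per hour.
    Returns (calls executed per hour, deferrable backlog left at the end).
    """
    backlog = 0
    executed = []
    for crit, defer in zip(critical, deferrable):
        backlog += defer
        run_critical = min(crit, capacity)    # critical calls go first
        spare = capacity - run_critical       # whatever capacity is left
        run_deferred = min(backlog, spare)    # drain backlog with spare capacity
        backlog -= run_deferred
        executed.append(run_critical + run_deferred)
    return executed, backlog


critical = [30, 80, 90, 20]
deferrable = [10, 40, 30, 0]
plan, leftover = time_shift(critical, deferrable, capacity=100)
print(plan, leftover)  # [40, 100, 100, 60] 0
```

In this example the raw demand in hours 1 and 2 is 120 calls, above the capacity of 100. Shifting the delay-tolerant calls into hour 3 keeps every hour within capacity without dropping any work.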

Protecting Downstream Services

To safeguard downstream services from overload, XFaaS implements the following techniques :-

Adaptive concurrency control - This feature establishes a limit on the number of functions that can execute concurrently. If traffic spikes suddenly, XFaaS raises the limit gradually (by 20% at a time) instead of all at once. This allows downstream services to warm up their caches and handle the increased load effectively.

Back-pressure signal - If a downstream service becomes overloaded, it can throw a back-pressure exception. XFaaS then responds by slowing down the execution of functions and potentially deferring non-critical tasks. This helps prevent downstream services from becoming overwhelmed.
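
The two mechanisms above can be combined into a limiter in the spirit of TCP congestion control. The following is a minimal sketch under stated assumptions, not XFaaS's implementation: the class name `AdaptiveLimit`, the floor and ceiling values, and the choice to halve the limit on back-pressure are all hypothetical. Only the 20% gradual increase comes from the description above.

```python
class AdaptiveLimit:
    """Concurrency limit that grows slowly and backs off quickly."""

    def __init__(self, limit, floor=1, ceiling=10_000, increase_pct=20):
        self.limit = limit
        self.floor = floor                # never drop below this (assumed)
        self.ceiling = ceiling            # never grow beyond this (assumed)
        self.increase_pct = increase_pct  # 20% step, as described above

    def update(self, back_pressure):
        """Adjust the limit once per time window and return the new value."""
        if back_pressure:
            # Assumed multiplicative decrease: halve the limit on overload.
            self.limit = max(self.floor, self.limit // 2)
        else:
            # Gradual increase; integer math avoids floating-point rounding.
            step = max(1, self.limit * self.increase_pct // 100)
            self.limit = min(self.ceiling, self.limit + step)
        return self.limit


limiter = AdaptiveLimit(limit=100)
history = [limiter.update(bp) for bp in (False, False, True, False)]
print(history)  # [120, 144, 72, 86]
```

The limit climbs in 20% steps while the downstream service is healthy, drops sharply when a back-pressure signal arrives, and then resumes its gradual climb.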

Now that you have learnt about XFaaS’s techniques to address the challenges, let’s delve deeper into its architecture.

XFaaS architecture

The architectural components can be divided into the following two categories :-

Core components - These are the components in the critical path of function execution. All the components in the above box, i.e., Submitter, QueueLB, DurableQ, etc., are core components.

Central Controllers - The components outside of the box are Central Controllers. These components are responsible for activities such as traffic distribution, load monitoring, config management, etc.

Let’s now understand the function execution flow in detail.

Clients submit the function along with its arguments to the Submitter.

The Submitter consults the Central Rate limiter to check the throttling limit. It then forwards the function to the QueueLB.

The QueueLB balances the load among several DurableQs. It gets the routing policy from the Configuration Management System. It then decides to distribute the load within and across the datacenter regions.

The DurableQ is a stateful component. It persists the function data in a highly-available and a sharded database. It’s responsible for ordering the functions based on criticality and execution time.

The Scheduler retrieves the functions from the DurableQ periodically. Its main responsibility is to determine the execution order of functions based on criticality, execution time and capacity quota.

The Scheduler receives the traffic matrix from the Global Traffic Conductor. Based on the traffic matrix, it then decides whether to pull the functions from DurableQ of different datacenter regions.

The Scheduler uses in-memory FuncBuffers to store each function. It fetches the top functions from the FuncBuffers and places them in the RunQ.

The WorkerLB then receives the functions from the RunQ. It then delegates each function to one of the Workers in the Worker pool.

The Locality Optimizer splits the functions and workers into locality groups. It does this to improve the cache-hit rate of JIT code cache.

Finally, the Utilization Controller monitors the workers’ utilization. It then adjusts the rate of invocation of functions. For example, if the workers are overloaded, the Scheduler stops scheduling the functions.
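
The Scheduler's selection step in the flow above can be sketched with a priority queue. This is an illustrative model only: the `Call` class, the `fill_run_queue` helper, the function names, and the convention that a lower number means higher criticality are all assumptions. The `slots` argument stands in for the free capacity that a component such as the Utilization Controller would report.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Call:
    criticality: int   # lower number = more critical (assumed convention)
    deadline: float    # any monotonic time unit
    name: str = field(compare=False)


def fill_run_queue(pending, slots, quota):
    """Pick up to `slots` calls, ordered by (criticality, deadline).

    quota: per-function cap on calls admitted in this round (hypothetical).
    Returns (run queue, calls left for a later round).
    """
    heap = list(pending)
    heapq.heapify(heap)
    run_q, skipped, used = [], [], {}
    while heap and len(run_q) < slots:
        call = heapq.heappop(heap)
        if used.get(call.name, 0) >= quota.get(call.name, slots):
            skipped.append(call)  # over quota, retry in a later round
            continue
        used[call.name] = used.get(call.name, 0) + 1
        run_q.append(call)
    return run_q, skipped + heap


pending = [
    Call(2, 50.0, "resize_image"),
    Call(1, 90.0, "send_notification"),
    Call(1, 30.0, "send_notification"),
    Call(3, 10.0, "rebuild_index"),
    Call(1, 60.0, "send_notification"),
]
run_q, rest = fill_run_queue(pending, slots=3, quota={"send_notification": 2})
print([c.name for c in run_q])
```

Here the two most urgent `send_notification` calls are admitted first, the third is held back by its quota, and the remaining slot goes to the next most critical call rather than to the one with the earliest deadline.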

XFaaS’s performance

The performance of XFaaS was measured on the production traffic. Several parameters such as CPU utilization, impact of locality groups, downstream service protection, etc were used to measure the success.

CPU Utilization

From the above graph, we can infer the following :-

XFaaS uniformly distributes the function execution despite the spiky traffic.

It achieves a high daily average CPU utilization of 66%. Based on anecdotal knowledge, this is higher than other cloud platforms.

The utilization level stays within a narrow band indicating efficient resource utilization in all the regions.

Locality groups

They conducted an experiment to evaluate the impact of locality groups. The workers in production were divided into two partitions - a) With locality group b) Without locality group.

It was observed that workers in the locality group consumed 11.8% and 11.4% less memory at P50 and P95 respectively. Thus, locality groups were effective at reducing the workers’ memory consumption.

As seen from the below graph, the memory consumption of workers stays at a stable level.

Cooperative JIT Compilation

To measure the impact of cooperative JIT compilation, they restarted workers with and without it. With cooperative JIT compilation, workers reached peak performance within 3 minutes, compared to 21 minutes without it.

The below graph shows the results of experiment :-

Downstream service protection

The team tested XFaaS's protection for downstream services during two real-world incidents. The first involved a social-graph database (TAO) connected to a write-through cache (WTCache) whose read & write availability dropped.

During the incident, XFaaS’s back-pressure mechanism kicked in. It slowed down function execution, which reduced the load on the overloaded WTCache.

The following diagram shows how the executions of Function A & B reduced when the downstream service degraded.

The second incident involved TAO’s migration where its capacity was under-sized in one of the datacenter regions. After the migration, TAO became overloaded.

XFaaS slowed down the execution of 200 unique functions. This resulted in 40% reduction in overall TAO traffic. This limited the end-user impact without any impact on the latency or availability.

Conclusion

FaaS platforms are gaining adoption rapidly. Developers prefer FaaS platforms due to their pay-as-you-go model, scaling capabilities & absence of capacity planning.

However, the benefits that FaaS platforms provide come at an additional cost for cloud providers. They face common challenges like cold start time, handling spiky loads and impact on downstream services.

Meta developed XFaaS, a hyperscale & low-cost serverless platform. It addresses the common challenges and handles load from a diverse set of workloads.

It uses the below techniques to address each of the challenges :-

Cold start time - It uses the model of Universal worker, Cooperative JIT compilation and locality groups to almost eliminate the cold start time.

Handling spiky loads - It defers the execution of non-critical functions to a later time. Further, the function execution is distributed across different datacenter regions.

Downstream service impact - It employs back-pressure signal mechanism to slow down the pace of function execution. Further, it also uses adaptive concurrency control to prevent sudden overload on downstream services.

The above techniques have enabled XFaaS to achieve high CPU utilization, reduce costs and handle spiky loads. Existing cloud providers can learn from and implement techniques such as cold start time optimization to further improve the customer experience.

One of the key learnings from XFaaS is Meta’s data-driven approach to problem solving. They identified the characteristics of different workloads, & estimated the parameters like CPU, Memory and Execution time.

The design decisions, such as deferring function execution, were taken after carefully considering the existing workload characteristics. Further, they also emphasised the importance of efficiently utilizing resources in different datacenter regions.

What features are missing from current public cloud FaaS platforms? What can they learn from XFaaS to improve their offerings? Share your thoughts in the comments below!


Thanks for reading the article!
